Information obtained through functional magnetic resonance imaging (fMRI) measurements of whole-brain activity can be used to create feasible, efficient, and fair solutions to one of the stickiest dilemmas in economics, the public-goods free-rider problem, according to economists and neuroscientists from the California Institute of Technology (Caltech). The problem was long thought to be unsolvable.

This is one of the first-ever applications of neurotechnology to real-life economic problems, the researchers note. "We have shown that by applying tools from neuroscience to the public-goods problem, we can get solutions that are significantly better than those that can be obtained without brain data," says Antonio Rangel, associate professor of economics at Caltech and the paper's principal investigator. The paper describing their work was published today in Science Express, the online edition of the journal Science.

Examples of public goods range from healthcare, education, and national defense to the weight room or heated pool that your condominium board decides to purchase. But how does the government or your condo board decide which public goods to spend its limited resources on? And how do these powers decide the best way to share the costs?

"In order to make the decision optimally and fairly," says Rangel, "a group needs to know how much everybody is willing to pay for the public good. This information is needed to know if the public good should be purchased and, in an ideal arrangement, how to split the costs in a fair way."

In such an ideal arrangement, someone who swims every day should be willing to pay more for a pool than someone who hardly ever swims. Likewise, someone who has kids in public school should have more of her taxes put toward education.

But providing public goods optimally and fairly is difficult, Rangel notes, because the group leadership doesn't have the necessary information. And when people are asked how much they value a particular public good—with that value measured in terms of how many of their own tax dollars, for instance, they'd be willing to put into it—their tendency is to lowball.

In other words, he says, "There's an incentive for you to lie about how much the good is worth to you."
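The incentive to lowball can be illustrated with a minimal sketch. It assumes a hypothetical rule that is not taken from the study: the group buys the good only if the reported values add up to at least its cost, and the cost is then split in proportion to the reports. The function names and all numbers are made up for illustration.

```python
def split_costs(reports, cost):
    """Return each member's cost share, or None if the good is not bought.

    Hypothetical rule: buy only if reported values cover the cost,
    then split the cost in proportion to each member's report.
    """
    total = sum(reports)
    if total < cost:
        return None
    return [cost * r / total for r in reports]


def payoff(true_value, share):
    """Net benefit: what the good is really worth to you minus what you pay."""
    return true_value - share


true_values = [40, 30, 30]  # illustrative true valuations
cost = 60                   # illustrative fixed cost of the good

honest = split_costs(true_values, cost)    # everyone reports truthfully
lowball = split_costs([20, 30, 30], cost)  # member 0 under-reports

honest_payoff = payoff(true_values[0], honest[0])
lowball_payoff = payoff(true_values[0], lowball[0])
print(honest_payoff, lowball_payoff)  # 16.0 25.0
```

Under these assumed numbers, the member who under-reports still gets the good but pays less for it, while the other members pay more. If everyone lowballs far enough, the reports no longer cover the cost and the good is not bought at all.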

In fact, for decades it's been assumed that there is no way to give people an incentive to be honest about the value they place on public goods while maintaining the fairness of the arrangement.

"But this result assumed that the group's leadership does not have direct information about people's valuations," says Rangel. "That's something that neurotechnology has now made feasible."

And so Rangel, along with Caltech graduate student Ian Krajbich and their colleagues, set out to apply neurotechnology to the public-goods problem.

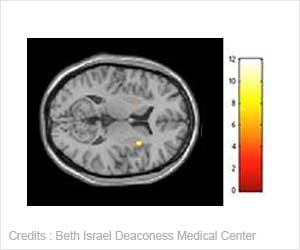

In their series of experiments, the scientists tried to determine whether functional magnetic resonance imaging (fMRI) could allow them to construct informative measures of the value a person assigns to one or another public good. Once they'd determined that fMRI images—analyzed using pattern-classification techniques—can confer at least some information (albeit "noisy" and imprecise) about what a person values, they went on to test whether that information could help them solve the free-rider problem.

They did this by setting up a classic economic experiment, in which subjects would be rewarded (paid) based on the values they were assigned for an abstract public good.

As part of this experiment, volunteers were divided up into groups. "The entire group had to decide whether or not to spend their money purchasing a good from us," Rangel explains. "The good would cost a fixed amount of money to the group, but everybody would have a different benefit from it."

The subjects were asked to reveal how much they valued the good. The twist? Their brains were being imaged via fMRI as they made their decision. If there was a match between their decision and the value detected by the fMRI, they paid a lower tax than if there was a mismatch. It was, therefore, in all subjects' best interest to reveal how they truly valued a good; by doing so, they would on average pay a lower tax than if they lied.
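The logic of this match-or-mismatch rule can be sketched in a few lines. This is a simplified illustration, not the study's actual tax schedule: the accuracy figure, the two tax amounts, and the function name `expected_tax` are all assumptions. The sketch treats the fMRI readout as a noisy signal that matches a subject's true value with some probability above chance.

```python
def expected_tax(truthful, accuracy, tax_match, tax_mismatch):
    """Expected tax for one subject under a hypothetical match/mismatch rule.

    accuracy     -- assumed probability that the noisy brain-based signal
                    agrees with the subject's TRUE value
    tax_match    -- assumed tax when the report matches the signal
    tax_mismatch -- assumed (higher) tax when report and signal disagree
    """
    # A truthful report matches the signal whenever the signal is right;
    # a false report matches it only when the signal happens to be wrong.
    p_match = accuracy if truthful else 1.0 - accuracy
    return p_match * tax_match + (1.0 - p_match) * tax_mismatch


# Illustrative numbers only: a signal that is right 60% of the time.
honest_tax = expected_tax(True, accuracy=0.6, tax_match=2.0, tax_mismatch=10.0)
lying_tax = expected_tax(False, accuracy=0.6, tax_match=2.0, tax_mismatch=10.0)
print(honest_tax < lying_tax)  # True
```

In this sketch, even a signal that is only somewhat better than a coin flip makes honesty cheaper on average, because a truthful report is more likely than a false one to agree with the readout. The gap widens as the assumed accuracy rises.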

"The rules of the experiment are such that if you tell the truth," notes Krajbich, who is the first author on the Science paper, "your expected tax will never exceed your benefit from the good."

In fact, the more cooperative subjects are when undergoing this entirely voluntary scanning procedure, "the more accurate the signal is," Krajbich says. "And that means the less likely they are to pay an inappropriate tax."

This changes the whole free-rider scenario, notes Rangel. "Now, given what we can do with the fMRI," he says, "everybody's best strategy in assigning value to a public good is to tell the truth, regardless of what you think everyone else in the group is doing."

And tell the truth they did—98 percent of the time, once the rules of the game had been established and participants realized what would happen if they lied. In this experiment, there is no free ride, and thus no free-rider problem.

"If I know something about your values, I can give you an incentive to be truthful by penalizing you when I think you are lying," says Rangel.

While the readings do give the researchers insight into the value subjects might assign to a particular public good, thus allowing them to know when those subjects are being dishonest about the amount they'd be willing to pay toward that good, Krajbich emphasizes that this is not actually a lie-detector test.

"It's not about detecting lies," he says. "It's about detecting values—and then comparing them to what the subjects say their values are."

"It's a socially desirable arrangement," adds Rangel. "No one is hurt by it, and we give people an incentive to cooperate with it and reveal the truth."

"There is mind reading going on here that can be put to good use," he says. "In the end, you get a good produced that has a high value for you."

From a scientific point of view, says Rangel, these experiments break new ground. "This is a powerful proof of concept of this technology; it shows that this is feasible and that it could have significant social gains."

And this is only the beginning. "The application of neural technologies to these sorts of problems can generate a quantum leap improvement in the solutions we can bring to them," he says.

Indeed, Rangel says, it is possible to imagine a future in which, instead of a vote on a proposition to fund a new highway, this technology is used to scan a random sample of the people who would benefit from the highway to see whether it's really worth the investment. "It would be an interesting alternative way to decide where to spend the government's money," he notes.

Source: EurekAlert

RAS

MEDINDIA