A collaborative team of researchers from Brown University and the University of Cincinnati has found that a front portion of the brain that handles decision-making also helps decipher different phonetic sounds.

Writing in the journal Psychological Science, the researchers identify this region as the left inferior frontal sulcus. They report that it treats different pronunciations of the same speech sound, such as a 'd' sound, the same way.

The researchers say that, in establishing this, they have solved a mystery about how the brain copes with variation in speech.

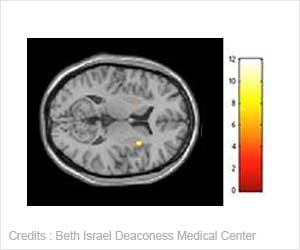

MRI studies showed that test subjects reacted to different sounds (ta and da, for example) but appeared to recognize the same sound even when it was pronounced with slight variations. All five sounds shown are the same speech sound, but the fifth (right) is pronounced slightly differently.

"No two pronunciations of the same speech sound are exactly alike. Listeners have to figure out whether these two different pronunciations are the same speech sound such as a 'd' or two different sounds such as a 'd' sound and a 't' sound," said Emily Myers, assistant professor (research) of cognitive and linguistic sciences at Brown University.

Lead researcher Sheila Blumstein, the Albert D. Mead Professor of Cognitive and Linguistic Sciences at Brown, said that the findings provided a window into how the brain processes speech.

The research team studied 18 participants (13 women and five men) aged 19 to 29. Each was scanned at Brown University's Magnetic Resonance Facility so that the researchers could measure changes in blood flow in response to different types of stimuli.

The study showed that the brain signal in the left inferior frontal sulcus changed when the final sound was a different speech sound. But if the final sound was only a different pronunciation of the same sound, the brain's response remained steady.

According to Myers and Blumstein, the study helps explain how the brain understands language and how it recognizes a speech sound across different pronunciations.

"What these results suggest is that [the left inferior frontal sulcus] is a shared resource used for both language and non-language categorization," Blumstein said.

Source: ANI

TAN

MEDINDIA