In a surprising result, participants in a study testing a robotic prosthetic arm were unhappy with it because it was 'too easy' to use.

"If we're too challenged, we get angry and frustrated. But if we aren't challenged enough, we get bored," said John Bricout.

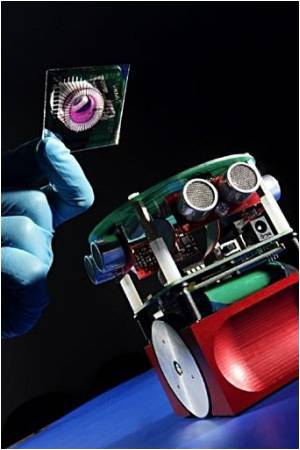

The computer program is based on how the human eye sees. A touch screen, computer mouse, joystick or voice command sends the arm into action. Then sensors mounted on the arm see an object, gather information and relay it to the computer, which completes the calculations necessary to move the arm and retrieve the object.

Assistant Professor Aman Behal said the key was to produce a hybrid model that each user could customize to their own comfort level.

"You have no idea what it is like to want to do something as simple as scratching your nose and have to rely on someone else to do it for you," said Bob Melia, a quadriplegic who advised the UCF team.

"I see this device as someday giving people more freedom to do a lot more things, from getting their own bowl of cereal in the morning to scratching their nose anytime they want."
